Gasgoo Munich- On February 12, Xiaomi CEO Lei Jun announced on Weibo that the company's robotics team has officially open-sourced Xiaomi-Robotics-0, a 4.7-billion-parameter vision-language-action (VLA) model for embodied intelligence. Built on a Mixture-of-Transformers architecture, the model achieved state-of-the-art results on every benchmark in the LIBERO, CALVIN, and SimplerEnv simulation suites, outperforming 30 competing models.

Image Source: Xiaomi Technology

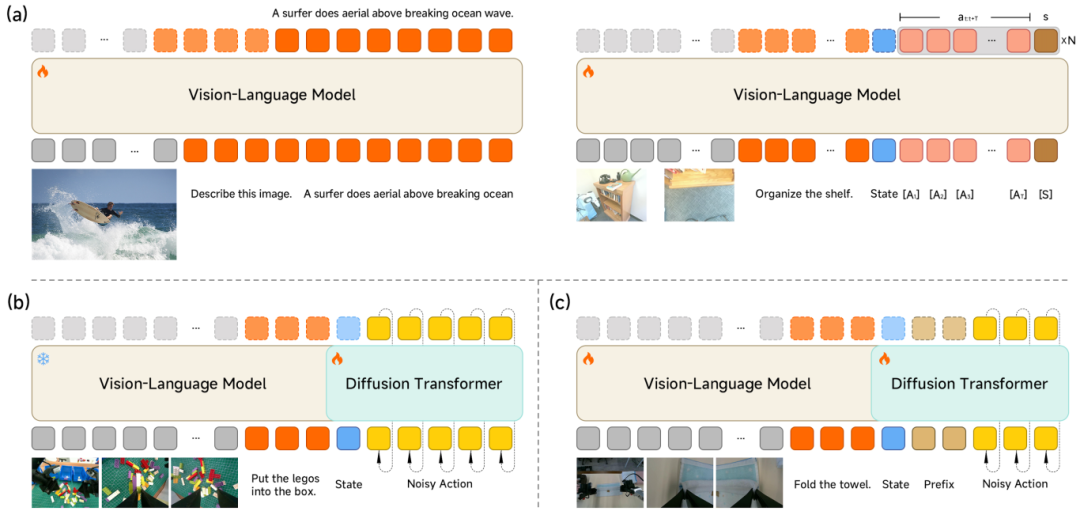

Xiaomi-Robotics-0’s core innovation is its use of a Mixture-of-Transformers (MoT) architecture to decouple the Vision-Language Model (VLM) from a multi-layer Diffusion Transformer (DiT). The VLM interprets ambiguous instructions and performs spatial reasoning, while the DiT uses flow matching to generate continuous, high-frequency action chunks. This split enables real-time inference on consumer-grade GPUs and addresses a common industry pain point: in existing VLA models, inference latency makes robot movements stutter or lag.
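The article does not publish Xiaomi's sampler, but the generic flow-matching inference loop it refers to can be sketched as follows: starting from Gaussian noise, an action chunk is integrated along a velocity field from t = 0 to t = 1 with a fixed number of Euler steps. Here `velocity_fn` is a hypothetical stand-in for the DiT (which in the real model would also be conditioned on VLM features), the toy field simply steers toward a known target chunk along a straight-line path, and the step count and chunk shape are illustrative assumptions.

```python
import random
from typing import Callable, List

Chunk = List[List[float]]  # shape: [horizon][action_dim]


def sample_action_chunk(
    velocity_fn: Callable[[Chunk, float], Chunk],
    horizon: int,
    action_dim: int,
    num_steps: int = 10,
    seed: int = 0,
) -> Chunk:
    """Integrate dx/dt = v(x, t) from noise (t=0) to an action chunk (t=1) with Euler steps."""
    rng = random.Random(seed)
    x = [[rng.gauss(0.0, 1.0) for _ in range(action_dim)] for _ in range(horizon)]
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # t stays below 1, so the toy field below never divides by zero
        v = velocity_fn(x, t)
        x = [[xi + dt * vi for xi, vi in zip(row_x, row_v)] for row_x, row_v in zip(x, v)]
    return x


# Hypothetical target chunk: 3 timesteps of a 2-D action.
TARGET: Chunk = [[0.1, -0.2], [0.3, 0.0], [0.5, 0.2]]


def toy_velocity(x: Chunk, t: float) -> Chunk:
    # Exact velocity of the straight-line path from the current point to TARGET;
    # a trained DiT would instead predict a velocity from observations and language.
    return [[(a - xi) / (1.0 - t) for xi, a in zip(row_x, row_a)] for row_x, row_a in zip(x, TARGET)]


chunk = sample_action_chunk(toy_velocity, horizon=3, action_dim=2)
```

Because the toy field is exact, the last Euler step lands on `TARGET` up to floating-point error. A learned field only approximates the ideal velocity, so in general the number of integration steps trades inference latency against accuracy.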

Model architecture and training methods: (a) hybrid multimodal and action pre-training of the VLM; (b) specialized pre-training of the DiT; (c) post-training on target tasks. Image Source: Xiaomi Technology

Training proceeds in two phases: pre-training, which itself has two stages, and post-training. In the first pre-training stage, cross-modal pre-training, an Action Proposal mechanism requires the VLM to predict multimodal action distributions while it processes images, aligning the VLM’s feature space with the action space. In the second stage, the VLM is frozen while the DiT undergoes specialized training to reconstruct precise action sequences from noise.

Post-training focuses on asynchronous inference, which decouples the model’s reasoning from the robot’s physical execution. A Clean Action Prefix preserves trajectory continuity by incorporating inputs from the previous step, while a Λ-shape Attention Mask prioritizes immediate visual feedback, sharpening the robot’s reflexes when its environment is disturbed.
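The second pre-training stage, in which the DiT learns to reconstruct action sequences from noise while the VLM stays frozen, corresponds to the standard flow-matching objective. The sketch below assumes the common linear interpolation path x_t = (1 - t) * noise + t * actions with regression target actions - noise; Xiaomi's exact path, time sampling, and loss weighting are not given in the article. `predict_velocity` and `frozen_features` are hypothetical placeholders for the DiT and the precomputed VLM output.

```python
import random
from typing import Callable, List, Tuple

Chunk = List[List[float]]  # shape: [horizon][action_dim]
VelocityModel = Callable[[Chunk, float, object], Chunk]


def make_training_pair(actions: Chunk, rng: random.Random) -> Tuple[Chunk, float, Chunk]:
    """Noise a ground-truth chunk to a random time t and return (x_t, t, target_velocity)."""
    t = rng.random()
    noise = [[rng.gauss(0.0, 1.0) for _ in row] for row in actions]
    x_t = [[(1.0 - t) * n + t * a for n, a in zip(rn, ra)] for rn, ra in zip(noise, actions)]
    target = [[a - n for n, a in zip(rn, ra)] for rn, ra in zip(noise, actions)]
    return x_t, t, target


def flow_matching_loss(
    predict_velocity: VelocityModel,
    frozen_features: object,
    actions: Chunk,
    rng: random.Random,
) -> float:
    """Mean squared error between predicted and target velocity for one sample.

    `frozen_features` is computed once by the (frozen) VLM and never updated;
    in a real training loop only the velocity model would receive gradients.
    """
    x_t, t, target = make_training_pair(actions, rng)
    pred = predict_velocity(x_t, t, frozen_features)
    errors = [(p - g) ** 2 for rp, rg in zip(pred, target) for p, g in zip(rp, rg)]
    return sum(errors) / len(errors)


ACTIONS: Chunk = [[0.2, -0.1], [0.4, 0.1]]  # hypothetical ground-truth chunk


def oracle(x_t: Chunk, t: float, _features: object) -> Chunk:
    # Knows the true chunk, so it recovers actions - noise == (actions - x_t) / (1 - t).
    return [[(a - x) / (1.0 - t) for x, a in zip(rx, ra)] for rx, ra in zip(x_t, ACTIONS)]


def zeros(x_t: Chunk, t: float, _features: object) -> Chunk:
    # A model that has learned nothing.
    return [[0.0 for _ in row] for row in x_t]
```

The oracle drives the loss to (numerically) zero because, on the linear path, actions - x_t = (1 - t)(actions - noise); a trained DiT has to approximate that velocity from its inputs alone.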
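Asynchronous inference with a clean action prefix can be illustrated with a small simulation. The assumptions here are ours, not Xiaomi's: actions are 1-D floats, inference for the next chunk is launched when `prefix_len` actions of the current chunk remain, those actions keep executing while the model runs in a background thread, and they are handed to the policy as the committed prefix that the new chunk must continue from. `toy_policy` and `CHUNK_LEN` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

Policy = Callable[[List[float]], List[float]]  # committed prefix -> full chunk
CHUNK_LEN = 8  # hypothetical chunk length


def run_async(policy: Policy, prefix_len: int, num_chunks: int) -> List[float]:
    """Simulate chunked control where inference overlaps execution (1-D actions)."""
    executed: List[float] = []
    pending = policy([])  # first chunk is computed before the robot starts moving
    with ThreadPoolExecutor(max_workers=1) as pool:
        for _ in range(num_chunks - 1):
            # Execute the current chunk until only `prefix_len` actions remain.
            while len(pending) > prefix_len:
                executed.append(pending.pop(0))
            prefix = list(pending)
            future = pool.submit(policy, prefix)  # the model reasons in the background...
            executed.extend(prefix)               # ...while the robot executes the prefix
            new_chunk = future.result()
            # The first len(prefix) steps of the new chunk are the already-executed
            # prefix, so execution resumes right after them.
            pending = new_chunk[len(prefix):]
    executed.extend(pending)
    return executed


def toy_policy(prefix: List[float]) -> List[float]:
    # Hypothetical policy: keep the clean prefix and continue a ramp from its last action.
    last = prefix[-1] if prefix else -0.1
    rest = [last + 0.1 * (k + 1) for k in range(CHUNK_LEN - len(prefix))]
    return list(prefix) + rest


trajectory = run_async(toy_policy, prefix_len=3, num_chunks=4)
```

If `toy_policy` ignored its prefix and restarted from zero, the executed trajectory would jump at every chunk boundary; conditioning on the committed prefix is what keeps it continuous while inference and execution overlap.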
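The article does not define the Λ-shape Attention Mask. In the language-model literature (LM-Infinite), a Λ-shaped mask is a causal mask in which each query attends only to a few leading tokens plus a window of the most recent tokens; applied to a robot's token stream, the recent window would, by assumption, keep attention on the latest observations. The sketch below builds that generic pattern only; Xiaomi's actual mask may be arranged differently, and `n_global` and `n_local` are illustrative parameters.

```python
from typing import List


def lambda_shape_mask(seq_len: int, n_global: int, n_local: int) -> List[List[bool]]:
    """Return mask[i][j] == True where query position i may attend to key position j.

    Causal (j <= i), restricted to the first `n_global` positions plus the
    `n_local` most recent positions up to and including i.
    """
    return [
        [j <= i and (j < n_global or i - j < n_local) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def render(mask: List[List[bool]]) -> str:
    # One row per query; '#' = may attend, '.' = masked out.
    return "\n".join("".join("#" if m else "." for m in row) for row in mask)


mask = lambda_shape_mask(seq_len=6, n_global=1, n_local=2)
print(render(mask))
```

The rendered mask shows a fixed column at the first position and a narrow band along the diagonal, the two strokes of the "Λ".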

In real-world deployment tests, a dual-arm robot running the model maintained stable hand-eye coordination on long-horizon tasks involving many degrees of freedom, such as disassembling blocks and folding towels. Crucially, the model retained the VLM’s native object-detection and visual question-answering capabilities. The project’s code, model weights, and technical documentation are now available on GitHub and Hugging Face.