"Every layer of the computing technology stack is being rebuilt."

Under the lights of CES 2026 in Las Vegas, NVIDIA founder Jensen Huang opened with a loaded line. The remark landed like a boulder dropped into a still lake, with ripples quickly spreading across the tech world.

What drew even more attention: the much-anticipated keynote made no mention of new gaming GPUs — the bedrock business that once propelled NVIDIA's rise. That omission stood in stark contrast to the fervent focus on "physical AI" on stage.

This was a clear signal of a carefully orchestrated pivot: NVIDIA's commercial focus is shifting from AI chips to physical AI — and to becoming a computing infrastructure provider for the AI era.

The company that started with graphics processors is placing its biggest AI bet on a more disruptive frontier — AI that not only understands and generates content, but also grasps physical laws, reasons about cause and effect, and acts safely and reliably in the real world.

On stage, Huang went so far as to declare that "the ChatGPT moment for physical AI has arrived." In other words, the historic AI wave is moving beyond cognition and generation into the deeper waters of understanding and action.

A paradigm shift: from cognition to action

Huang set the tone from the start: "About every ten to fifteen years, the computer industry goes through a reset." From mainframes to PCs, then the internet, cloud and mobile — each platform migration has spawned new application ecosystems.

Now, he said, two shifts are happening at once: first, applications will increasingly be built on top of AI; second, the way software is developed and run has fundamentally changed.

He distilled the upheaval into a crisp line: "You no longer 'program' software — you 'train' it. And you don't run it on CPUs anymore; you run it on GPUs."

That points to the end of the era of traditional, precompiled, statically executed software, and the rise of systems that grasp context and generate outputs dynamically from scratch with every interaction. The AI computing stack — the "five-layer cake," as he described it, spanning energy, chips, infrastructure, models and applications — is being reinvented at every tier.

Amid this sweeping rebuild, NVIDIA has locked its strategic radar onto a frontier that reaches beyond text and image generation and into the bedrock of the real world: physical AI. Huang put it plainly: language is not the only type of information that large models can learn. Wherever the universe has information or structure, we can teach a model to understand it, learn its representations, and turn it into AI. The most important of these is physical AI, meaning AI that understands the laws of nature.

Physical AI carries two crucial meanings: first, giving AI basic common sense about the physical world, such as gravity, friction and causality; second, enabling AI to act safely and effectively in the real world based on that common sense.

It marks a shift in AI's main channel of progress — from "virtual intelligence" that excels at symbols, cognition and generation to "action intelligence" that understands physics and executes in the physical world.

Driving this shift is urgent demand from industry for machine action — and the technical push to break through data bottlenecks.

Take autonomous driving: what a car needs isn't a perfect cloud-generated description of driving, but the ability to make a safe merge decision in milliseconds at a rain-swept intersection at night. In manufacturing, robots must adapt to a part shape they've never seen or a sudden change in assembly order, not just repeat preset paths. All roads point to one thing: AI has to leave the comfort of the virtual and step into the uncertainty of the real.

Training such AI faces a fundamental challenge — instilling common-sense understanding of how the physical world works, like object permanence and cause and effect. Obvious to humans, yet entirely unknown to machines.

To tackle that, Huang argued, we must build a system that lets AI learn the common sense and rules of the physical world. "Of course you can learn from data, but when data is scarce, you also need to assess whether the AI is effective. That means it has to simulate the environment. If AI can't simulate how the physical world responds to its actions, how does it know whether it did what it intended?"

That calls for more than piling up data. NVIDIA's answer is a basic system composed of three computers: one to train the model, one to run inference, and one for physics simulation. This triad underpins all physical AI work. Working in close coordination, the three machines form an industrial pipeline that moves physical AI from cognition to action, and from virtual to real.
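To make the division of labor concrete, here is a minimal toy sketch of the three roles described above, written in Python. It is an illustration under stated assumptions, not NVIDIA's software: the sliding-block physics, the `Policy` class and the functions `simulate_slide`, `train` and `infer` are all hypothetical names invented for this example. The simulator stands in for the physics computer, the training loop uses simulated outcomes instead of scarce real data, and inference simply emits the learned action.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration in m/s^2


def simulate_slide(launch_speed: float, friction: float) -> float:
    """Simulation role: distance (m) a block slides before friction stops it."""
    return launch_speed ** 2 / (2 * friction * G)


@dataclass
class Policy:
    """Toy 'model' with one learnable parameter: the launch speed in m/s."""
    launch_speed: float = 1.0


def train(policy: Policy, target: float, friction: float,
          steps: int = 500, lr: float = 0.05) -> Policy:
    """Training role: adjust the parameter until the simulated outcome matches the goal."""
    for _ in range(steps):
        error = simulate_slide(policy.launch_speed, friction) - target
        # Gradient of 0.5 * error^2 with respect to launch_speed.
        grad = error * policy.launch_speed / (friction * G)
        policy.launch_speed -= lr * grad
    return policy


def infer(policy: Policy) -> float:
    """Inference role: the trained policy emits an action for the machine to execute."""
    return policy.launch_speed


policy = train(Policy(), target=2.0, friction=0.3)
action = infer(policy)
# The simulator also answers Huang's question: did the action do what was intended?
predicted = simulate_slide(action, friction=0.3)
print(f"launch speed {action:.3f} m/s -> simulated slide {predicted:.3f} m")
```

In this sketch the simulator is used twice: once to generate the training signal and once to check the chosen action against the goal before anything moves in the real world.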

A full-stack engine for physical AI

Declaring a strategic pivot matters; making it real demands a solid technical backbone.

What NVIDIA showed at CES wasn't a single product refresh, but a complete full-stack engine — from base hardware and core software to development paradigms — designed to industrialize physical AI.

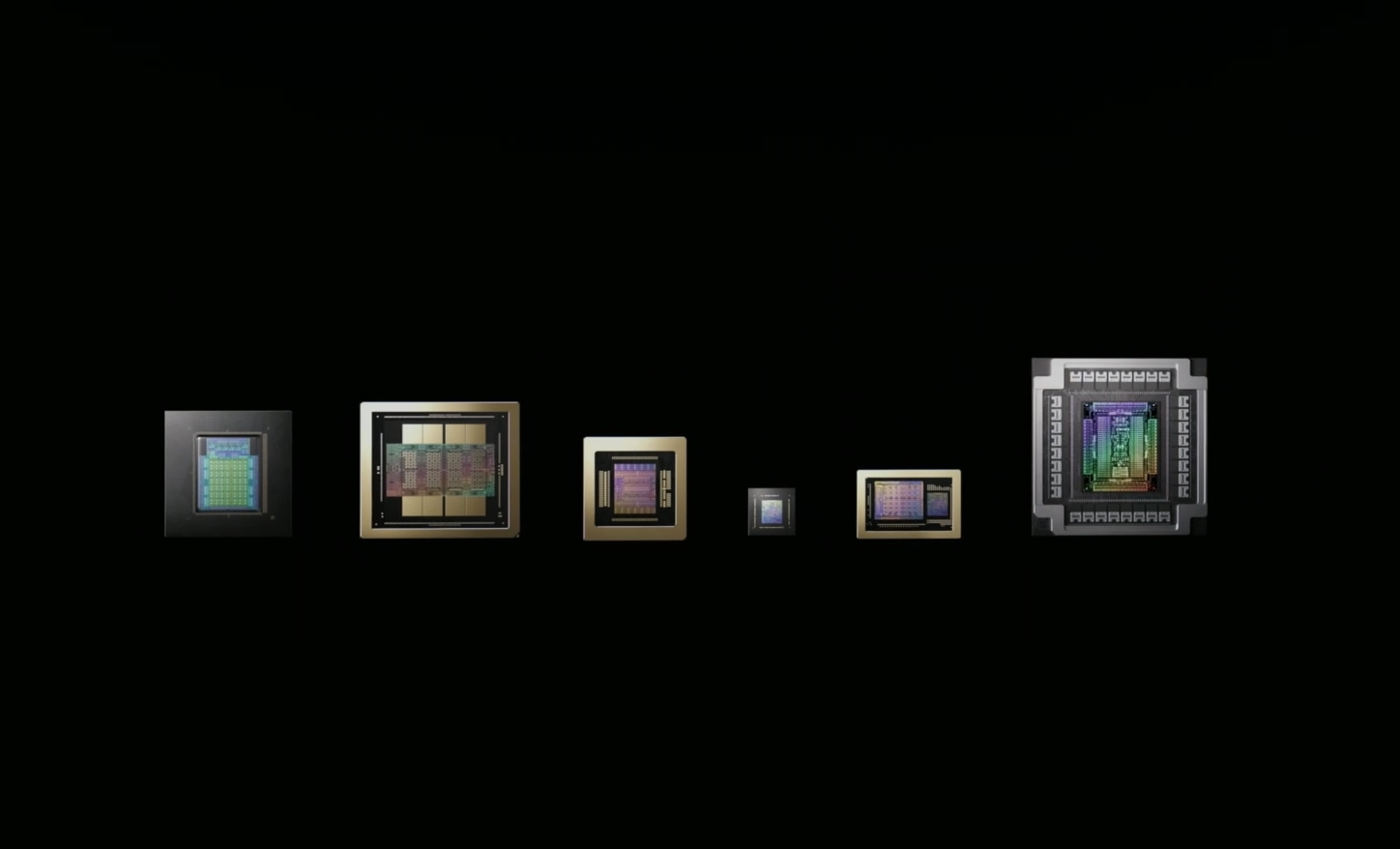

The hardware foundation is a new-generation AI supercomputing platform codenamed "Vera Rubin," named after astronomer Vera Florence Cooper Rubin, who uncovered evidence for dark matter — a nod to NVIDIA's ambition to probe the unknown physical world.

But Rubin's leap isn't just about paper specs. Compared with the prior Blackwell platform, Rubin delivers 5 times the inference performance and 3.5 times the training performance, and it can cut inference costs to one-tenth. The real breakthrough is a shift in architectural philosophy: it is an engine purpose-built for massive inference on trillion-parameter models.

Image source: NVIDIA livestream screenshot

The platform integrates six classes of chips — Vera CPU, Rubin GPU, NVLink 6 switches, ConnectX-9 SuperNIC, BlueField-4 data processing units and Spectrum-6 Ethernet switches. Beyond tighter hardware integration, chip-level co-design delivers up to 240 TB per second of GPU-to-GPU interconnect bandwidth within a Vera Rubin rack, aiming to systematically resolve compute, networking and storage bottlenecks in long-running AI inference workloads.

At the core sits the Rubin GPU, equipped with a third-generation Transformer Engine, with NVFP4 inference compute reaching 50 PFLOPS.

Huang said Rubin-series chips are now in full production and will roll out in the second half of this year, with key applications in physical AI training, robotics simulation and autonomous driving simulation. The order book has reached USD 300 billion. Early cloud adopters include AWS, Google Cloud and Microsoft.

On the software side, "physical commonsense" is carried by several open-source models, including the Cosmos world foundation model, Alpamayo for autonomous driving AI, and the Isaac GR00T N1.6 vision-language-action (VLA) model for humanoid robots.

Cosmos aims to give robots humanlike reasoning and world-generation capability. According to Huang, it has been pretrained on large volumes of video, real driving and robotics data, and 3D simulation, so that it learns basic physical laws and how the world operates.

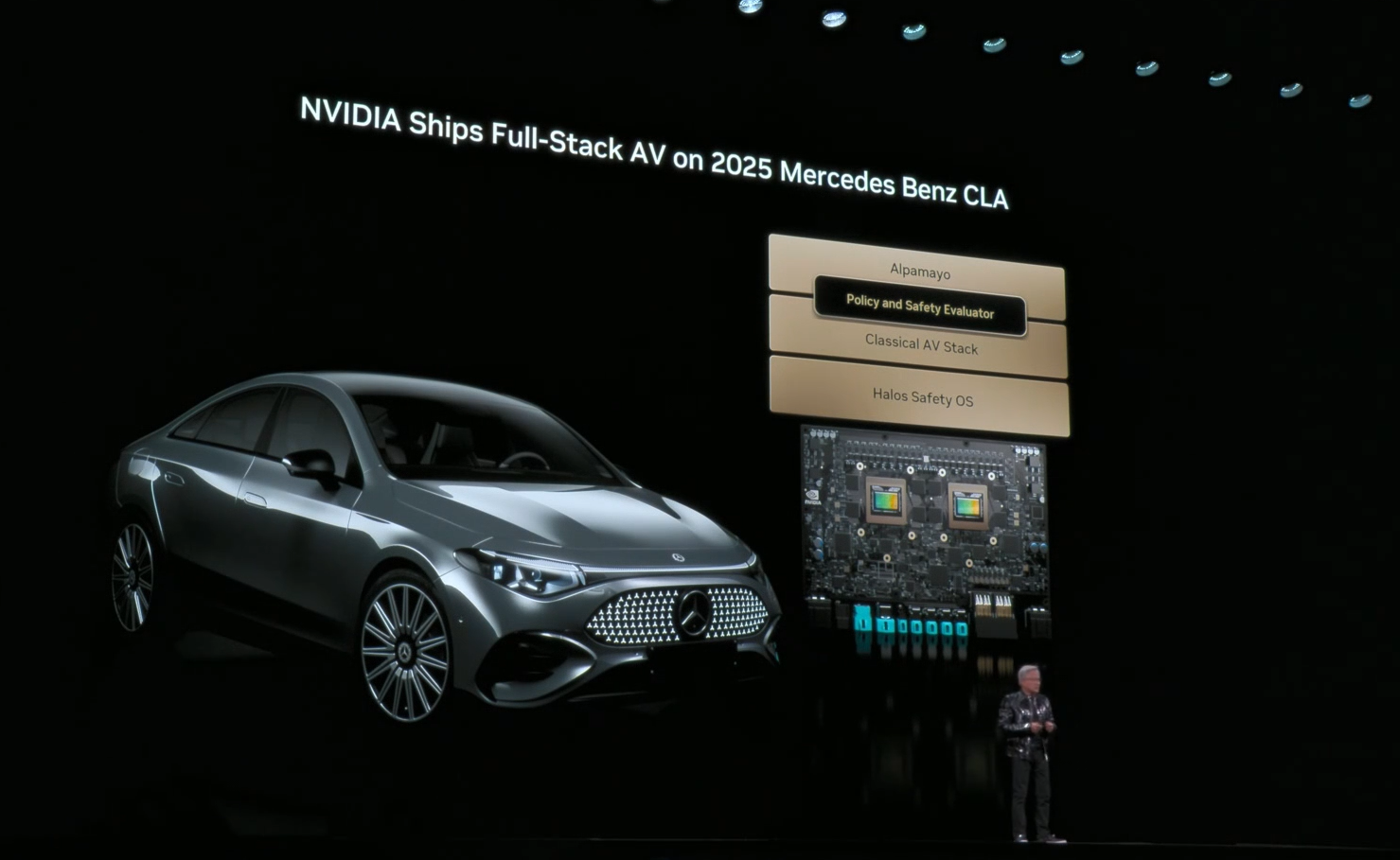

Image source: NVIDIA livestream screenshot

Alpamayo marks a paradigm shift in autonomous driving AI. Billed as the first open-source, large-scale reasoning VLA model for autonomous driving, Alpamayo is built around chain-of-thought reasoning: grasping causality in complex scenes and explaining its decision logic. Faced with a chaotic construction site or a pedestrian darting into the road, it no longer maps pixels straight to steering commands; instead it reasons through several steps, much as a human would. It perceives the surroundings, infers intent, assesses risk, and then generates a decision.
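The four steps above can be sketched as a tiny rule-based pipeline that records a human-readable trace alongside its decision. This is only an illustration of staged, explainable decision-making: it is not Alpamayo's interface or architecture, and the `Scene` fields, the `decide` function and the 3-second threshold are all assumptions made up for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """Hypothetical hand-written scene description standing in for sensor input."""
    pedestrian_distance_m: float
    pedestrian_moving_toward_lane: bool
    ego_speed_mps: float


@dataclass
class Decision:
    action: str
    trace: list[str] = field(default_factory=list)


def decide(scene: Scene) -> Decision:
    trace = []

    # Step 1: perceive the surroundings.
    trace.append(f"perceive: pedestrian {scene.pedestrian_distance_m:.0f} m ahead")

    # Step 2: infer intent.
    crossing = scene.pedestrian_moving_toward_lane
    trace.append(f"intent: pedestrian {'is' if crossing else 'is not'} entering the lane")

    # Step 3: assess risk as the time until the car reaches the pedestrian.
    time_to_reach_s = scene.pedestrian_distance_m / max(scene.ego_speed_mps, 0.1)
    risky = crossing and time_to_reach_s < 3.0  # arbitrary toy threshold
    trace.append(f"risk: {time_to_reach_s:.1f} s to reach, {'high' if risky else 'low'}")

    # Step 4: generate a decision that follows from the earlier steps.
    action = "brake" if risky else "continue"
    trace.append(f"decision: {action}")
    return Decision(action, trace)


result = decide(Scene(pedestrian_distance_m=20,
                      pedestrian_moving_toward_lane=True,
                      ego_speed_mps=10))
print("\n".join(result.trace))
```

The point of the trace is the contrast with a direct pixels-to-steering mapping: each intermediate conclusion is stated, so the final action can be audited step by step.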

Beyond the Alpamayo open-source AI models, Huang also unveiled AlpaSim — a fully open-source, high-fidelity end-to-end simulation framework for assisted driving — and a diversified, large-scale physical AI open dataset for assisted driving. Together, they form a self-reinforcing development loop for building reasoning-based assisted-driving stacks.

Alpamayo itself isn't a model for direct deployment on vehicles; it serves as a large teacher model for developers to fine-tune and distill, forming the core foundation of a complete assisted-driving stack. Any automaker or research team can build on it.
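The article does not say how developers are expected to distill the teacher model. One widely used approach, shown here purely as an assumption, is to train a smaller student to match the teacher's temperature-softened output distribution. The sketch below computes that loss with the standard library only; the function names and the three-way action scores are hypothetical.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float],
                      student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return kl * temperature ** 2


# Hypothetical scores for three candidate actions: brake, continue, swerve.
teacher = [4.0, 1.0, 0.5]
far_student = [0.5, 1.0, 4.0]     # disagrees with the teacher
close_student = [3.8, 1.1, 0.6]   # nearly matches the teacher

print(distillation_loss(teacher, far_student))
print(distillation_loss(teacher, close_student))
```

Minimizing this loss pushes the student toward the teacher's full ranking of options rather than only its top choice, which is the usual reason for preferring soft targets when compressing a large model into one small enough to run in a vehicle.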

Huang said the first Mercedes-Benz CLA equipped with NVIDIA's full autonomous driving stack will hit U.S. roads in the first quarter of 2026, with Europe in the second quarter and Asia in the second half. Jaguar Land Rover, Lucid and Uber — along with the autonomous driving research community, including Berkeley DeepDrive — will also lean on Alpamayo to accelerate safe, reasoning-based Level 4 deployments.

Open source, alliances and the standards fight

Armed with a powerful stack, NVIDIA's next move is to build an ecosystem that's hard to dislodge. The strategy blends open source as the spear, alliances as the shield, and platformization as the endgame.

The deeper aim isn't just sharing technology. By open-sourcing core models and toolchains like Cosmos and Alpamayo, NVIDIA is making a calculated commercial play: lower the industry's barriers to entry in the AI era, and establish itself as the de facto standards setter.

The logic is straightforward. If physical AI is indeed the next wave, the biggest market won't be a handful of tech giants buying GPUs to train foundation models, but thousands of robotics firms, automakers, logistics operators and agtech companies building and training their own physical AI models.

Against that backdrop, open-source tools can rapidly attract the largest pool of developers into NVIDIA's ecosystem. When engineers worldwide build with CUDA, Omniverse and Cosmos, those tools — and the NVIDIA hardware they rely on — become the "standard kit."

Open source, then, becomes the most efficient way to lock in the ecosystem and spread standards — ultimately to expand dominance at the hardware and platform layers. Notably, NVIDIA isn't just open-sourcing models; it's opening the data used to train them. In autonomous driving, for instance, NVIDIA's physical AI open dataset includes over 1,700 hours of driving data across diverse geographies and conditions, capturing rare and complex real-world edge cases essential for advancing reasoning architectures.

"Because only then can you truly trust how the model is produced," Huang said. In that sense, NVIDIA is also a frontier builder of AI models.

Strategic alliances with industry leaders are the accelerant that turns technical heft and ecosystem momentum into commercial reality.

In his keynote, Huang announced that the Mercedes-Benz CLA will be the first model to feature Alpamayo. Validation from a brand known for rigor and craftsmanship signals more than a purchase order — it's a vote of confidence in the safety, reliability and maturity of NVIDIA's physical AI.

That kind of partnership builds a virtuous loop: flagship customer deployments lower adoption risk for others, drawing more partners into the ecosystem; a richer ecosystem, in turn, boosts the platform's appeal and irreplaceability.

By now, NVIDIA's platform ambitions are clear — moving beyond the role of "AI chip supplier" to become the "definer and provider of full-stack computing infrastructure" for the physical AI age. The offering is a complete chip+model+toolchain solution, so that any company building physical AI applications naturally reaches for NVIDIA's stack — much like developers gravitate to iOS or Android.

The endgame is for "NVIDIA's ecosystem" to become synonymous with "AI development."

The boom in physical AI comes with challenges

The fire NVIDIA lit under physical AI is set to redraw the map across multiple industries.

First up: autonomous driving, robotics and industrial manufacturing. As Huang sees it, the ChatGPT moment for physical AI has arrived — machines are beginning to understand the real world, reason and take action. Robotaxis stand to benefit early, while Alpamayo's reasoning gives vehicles a better shot at handling rare scenarios, driving safely in complex environments and explaining their decisions, laying the groundwork for safe, scalable autonomy.

For robotics, physical AI means robots gain generalized "action intelligence," adapting quickly to new tasks and environments — moving from fixed, custom factory posts into more flexible warehousing, logistics, retail and even home settings. The business model shifts from selling hardware to robotics-as-a-service.

As the competitive battlefield expands, the dimensions of competition become more varied — and more complex.

Where AI competition once revolved around chip performance and efficiency, the physical AI era brings rivalry on several fronts. The first is world-model capability: whose foundation model understands physics more deeply and generally. The second is the completeness of the simulation ecosystem: whose virtual world is more faithful, whose tools are more usable and whose community is more active. The third is the real-world data loop: who better turns scarce real-world data into iterative gains in simulation models. Only then comes the traditional compute race.

NVIDIA's full-stack layout is an attempt to build advantages across all fronts at once.

Even so, the road to physical AI isn't smooth. The core technical hurdle is the "sim-to-real gap": no matter how fine-grained the simulation, it cannot capture all the noise and surprises of reality. Ensuring that agents trained in virtual worlds transfer safely and seamlessly to the real one remains an open challenge.

And when AI entities act autonomously in the physical world, safety and ethics are magnified. Explainability, fault tolerance and accountability will require new standards and frameworks.

Conclusion

Over the next 3–5 years, expect physical AI to move from tech demos to a critical inflection point in scaled commercial use.

NVIDIA, leveraging sharp strategic foresight, a full-stack technical reserve and savvy ecosystem design, is aiming to evolve from the "picks-and-shovels" seller of the AI rush to the "chief architect" and "rule-setter" of the physical intelligence era.

This shift from cognition to action isn't just about one company's trajectory — it's about redrawing the boundaries of how humans, machines and the physical world interact.